All Watched Over By Machines of Loving Grace

Some early thoughts on the intersection of nuclear weapons and artificial intelligence.

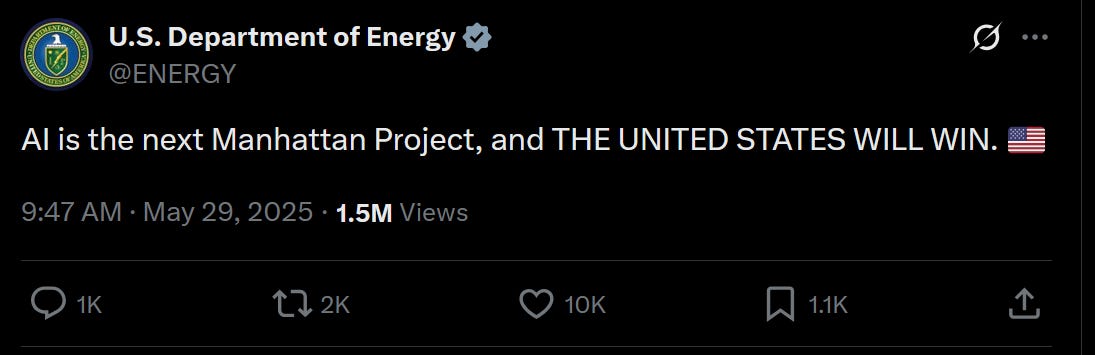

“AI is the next Manhattan Project, and the UNITED STATES WILL WIN.”

The Department of Energy, the part of the U.S. federal government that oversees its nuclear stockpile, posted that declaration on its X account the day after I learned I’d be an Outrider fellow. I’d spend the next two years reporting on the intersection of artificial intelligence and nuclear weapons, and here was the DoE posting about my new beat.

It felt like a sign, but then the world has been lousy with signs lately.

In January, Los Alamos National Laboratory announced a partnership with OpenAI. The folks who built the atom bomb would load the AI company’s latest models into its supercomputer and use them to “preserve America’s security.”

Last year, Air Force General Anthony J. Cotton, the head of STRATCOM, spoke at a conference about the importance of integrating artificial intelligence with America’s nuclear arsenal. “Advanced AI and robust data analytics capabilities provide decision advantage and improve our deterrence posture. IT and AI superiority allows for a more effective integration of conventional and nuclear capabilities, strengthening deterrence,” Cotton said.

I have so many questions. What, exactly, does Cotton mean when he says AI? Are the people at Los Alamos using LLMs in defense work? Could AI be a force for good in the nuclear weapons space? Could it, for example, monitor ICBM silos or enrichment facilities as part of a treaty regime? What do China, Russia, and the other nuclear powers think of AI and nukes? Why is the Department of Energy—again, the nuke people—taking the lead in the Trump administration when it comes to selling the public on artificial intelligence?

The current nuclear regime relies on deterrence: the sure knowledge that if you launch a nuke at a country, they’ll launch their own at you. What happens to that calculus when AI is added to the mix? Are the systems America plans to use as prone to hallucination as ChatGPT? What parts of the Golden Dome will run on AI? Was the Soviet Dead Hand system, technically, an early AI system?

I’ll spend the next two years working to answer those questions and more. I’m a freelance journalist, and the work will appear in several outlets, but I’ll also publish updates, ephemera, and looser thoughts here on Angry Planet. It’s a great time to subscribe.

I promise to keep the WarGames and Terminator references to a minimum. I could not, however, keep myself from tossing in a little Richard Brautigan. When I think about AI, and especially AI and nuclear weapons, his 1967 poem is the cultural reference that haunts me.

I like to think (and

the sooner the better!)

of a cybernetic meadow

where mammals and computers

live together in mutually

programming harmony

like pure water

touching clear sky.

I like to think

(right now please!)

of a cybernetic forest

filled with pines and electronics

where deer stroll peacefully

past computers

as if they were flowers

with spinning blossoms.

I like to think

(it has to be!)

of a cybernetic ecology

where we are free of our labors

and joined back to nature,

returned to our mammal

brothers and sisters,

and all watched over

by machines of loving grace.

My heart lives in the space where Brautigan’s bald-faced technological optimism smashes against the reality of the present moment. America had a dream of the future once that included flying cars and thinking machines and (it has to be!) an end to the threat of nuclear weapons.

The future arrived but the dream was poisoned. The thinking machines are all around us, but they’re parrots and mirrors, not the vast alien silicon intelligence we imagined. There are fewer nuclear weapons in the world now than there were in Brautigan’s day, but more countries have them and a decades-long trend of de-escalation has reversed course.

America, Russia, China, and Britain are all expanding their nuclear arsenals. Those same countries are building massive data centers and investing billions of dollars in artificial intelligence and promising innovations in everything from healthcare to national defense.

The old nuclear treaties are dead and new and terrible machines are here. It’s possible that we’ll all soon be watched over by machines of loving grace, robots whose love is enforced by the threat of nuclear annihilation.

I like to think (and the sooner the better!) it’s possible to build a world free of nuclear Armageddon. I’m skeptical that future involves the word calculators Silicon Valley is selling as AI, but I’ll hold off on making a final determination until I’ve gotten the answers to a lot of questions.